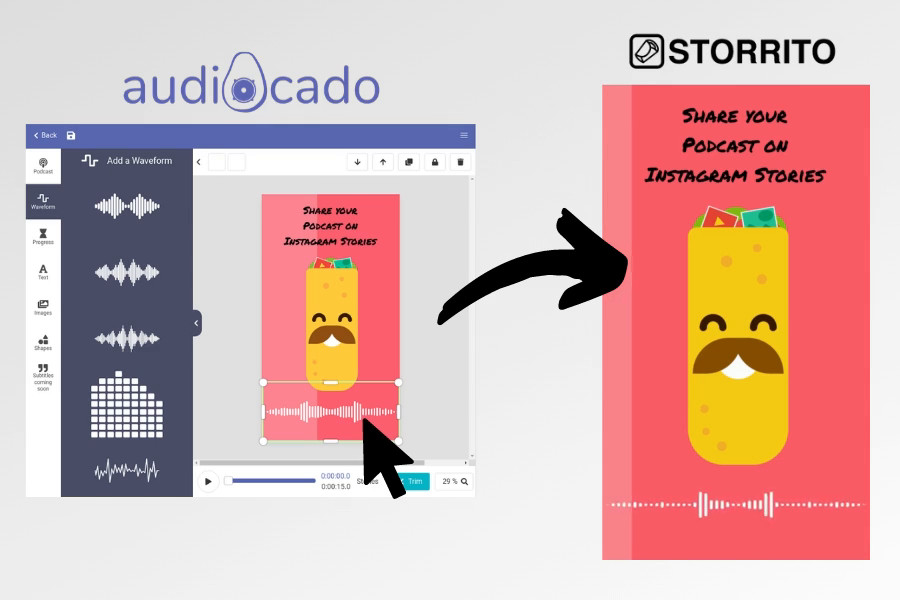


How much accuracy can you expect from Google and Co when they transcribe your podcast?

We often overestimate what computers are able to accomplish. At the same time, we underestimate the difficulty of the tasks that we humans perform almost effortlessly every day. One of these human superpowers is the ability to understand what other people are saying. While this seems easy for us, it is incredibly difficult for a computer. For decades, computer science made no significant progress in increasing the accuracy of software that tries to recognize the words spoken in an audio recording. I remember my father in the late 90s spending hours speaking to his office computer through a headset to train a new speech recognition program. But even after hours of training, the error rate of these programs was so annoying that you were better off spending the time improving your typing speed.

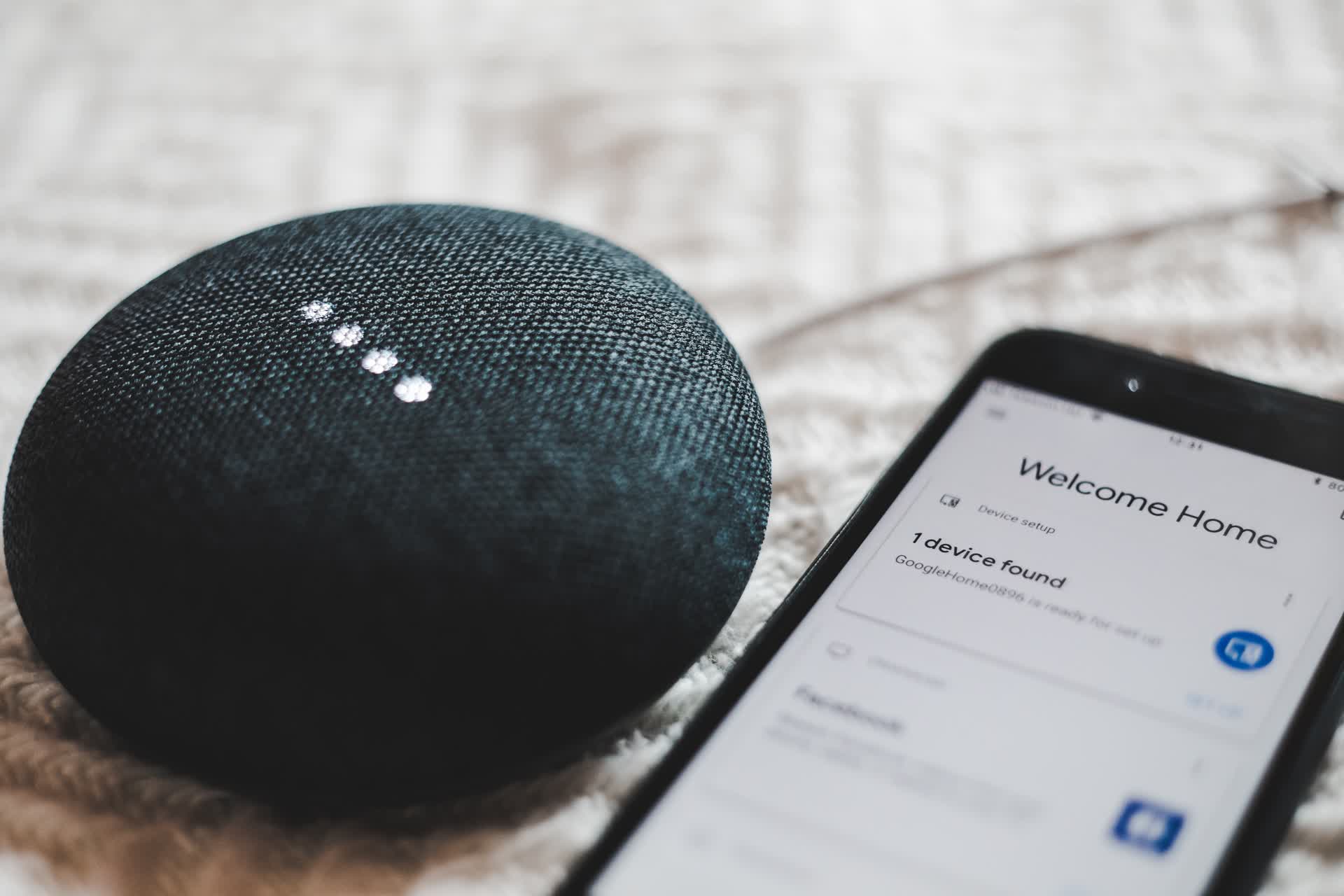

But in the last few years, the advent of deep learning has brought a major breakthrough in this area. All the fancy stuff like Amazon Alexa, Tesla's self-driving cars, and Google DeepMind's victories over the most advanced Go players and computer gamers was made possible by deep learning.

Photo by BENCE BOROS on Unsplash

Impressed by these recent developments, I expected it would be easy to find a Google, Amazon, or Microsoft cloud service that helps us transcribe podcasts to generate subtitles for Audiocado's audiograms. If you regularly use a smart speaker like Amazon Alexa or Google Home, you might question my initial optimism. I often feel like throwing my Google Home speaker out of the window when it just doesn't get what I want it to do.

With my initial optimism, I expected that we would transcribe podcasts with an accuracy of over 95%. This would mean that roughly 1 in 20 words is transcribed incorrectly. But reality showed us that the accuracy is closer to 80%, which means that about 4 of these 20 words are incorrect. Besides the higher effort needed to correct a transcription with a 20% error rate, it often becomes difficult to understand these sentences without re-listening to the audio.
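To make these percentages concrete, here is a minimal sketch of how word-level accuracy is commonly measured: the word error rate (WER), which is the word-level edit distance between a human reference transcript and the machine output, divided by the number of reference words. This post does not state how the accuracy figures were measured, so treating them as `1 - WER` is an assumption, and the function name `word_error_rate` is made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing in the output
                curr[j - 1] + 1,     # extra word in the output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# A 20-word reference in which the machine gets 4 words wrong.
reference = " ".join(f"word{i}" for i in range(20))
hypothesis = reference.replace("word3", "wrong").replace("word7", "wrong") \
                      .replace("word12", "wrong").replace("word18", "wrong")
print(word_error_rate(reference, hypothesis))  # 0.2, i.e. 80% accuracy
```

Note that this simple version ignores punctuation and spelling variants, which real evaluations usually normalize before comparing.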

It seems that we humans will stay superior to the machines for a while when it comes to transcribing audio. This is also one of the conclusions of this academic paper, which compares the accuracy of automatic speech recognition systems (Google Cloud, IBM Watson, Microsoft Azure, Trint, and YouTube). Yes, you read that correctly: YouTube, a Google-owned company, even won the accuracy comparison performed by the authors of this paper. The authors also tried to find correlations with the facial expressions of the participants, who were recorded on video (the audio of these videos was used as input for the transcription). They discovered that the smile intensity of a participant was higher when the dialog partner was intelligible.

Besides the advantage of reading body language and giving the dialog partner feedback as soon as we fail to understand what was said, we humans also have the context of the conversation, which helps us outperform automatic speech recognition systems, especially for longer audio recordings like podcasts. When you listen to a podcast, you automatically accumulate knowledge and new vocabulary explained by the participants. This context makes it much easier to understand later parts of the conversation, since you already know the new words. Speech-to-text services, on the other hand, must be fed with this vocabulary upfront to improve the recognition accuracy.

An advantage of these speech-to-text services is their low cost compared to a transcription performed by a human. Rev.com is one of the largest services offering a network of freelancers who transcribe your English audio recordings for $1.25 per audio minute. Foreign languages are even more expensive. Google, in contrast, charges only $0.024 and Microsoft $0.016 per audio minute.
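To get a feeling for the difference, here is a small sketch that applies the per-minute prices quoted above to a whole episode. The 60-minute episode length is just an example, and the calculation ignores free tiers, volume discounts, and billing increments, which are not covered here.

```python
# Per-minute prices in USD as quoted in this post.
PRICE_PER_MINUTE_USD = {
    "Rev.com (human)": 1.25,
    "Google": 0.024,
    "Microsoft": 0.016,
}


def transcription_cost(minutes: float) -> dict:
    """Return the cost in USD per service for the given audio length."""
    return {
        name: round(price * minutes, 2)
        for name, price in PRICE_PER_MINUTE_USD.items()
    }


# Example: a 60-minute podcast episode.
for name, cost in transcription_cost(60).items():
    print(f"{name}: ${cost:.2f}")
# Rev.com (human): $75.00
# Google: $1.44
# Microsoft: $0.96
```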

Another challenge when you rely on humans to transcribe your audio is speed. Rev.com makes it super simple to find a freelancer who will transcribe your audio, but even they offer only a 12-hour turnaround time. The human transcribers hired by the authors of the academic paper mentioned above took approximately 1 hour to process a 15-minute video, while the automatic systems needed only around 15 minutes to process the same video.

Okay fine, so what does this all mean for Audiocado? We want to give our users a comfortable way to leverage these speech-to-text services, so that they do not need to transcribe their podcasts manually. Luckily, Google and Co already offer these services for a huge number of languages. But the speech recognition accuracy for other languages is even lower than for English. Therefore, we also need to provide our users with an interface that allows them to correct the transcription generated by these cloud services.

A few months ago, we already had a working version that generated subtitles for the audiogram videos using Google's Speech-to-Text service. While using Audiocado ourselves, and thanks to feedback from early adopters, we recognized that we got the data model wrong. With Audiocado you often create multiple videos for the same podcast to promote it on social media, since Twitter, Facebook, and Instagram all have different formats and maximum video lengths. As a result, with our initial data model you often needed to generate and correct the same transcription multiple times.

We stored only the subtitles and dropped the information about when each word occurs in the audio (data that the aforementioned speech-to-text services normally return). Without the start and end time of each word, it is difficult to rearrange the subtitles when the user changes the start and end of the audio snippet (selected from the podcast episode). Consequently, reusing the subtitles for the other social media formats gets tricky very quickly.
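To illustrate why per-word timing helps, here is a minimal sketch of a data model that keeps a start and end time for every word and derives the subtitles for any snippet from it. This is not Audiocado's actual implementation: the names `Word`, `Cue`, and `subtitles_for_snippet` as well as the fixed words-per-subtitle grouping are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the beginning of the episode
    end: float


@dataclass
class Cue:
    text: str
    start: float  # seconds from the beginning of the snippet
    end: float


def subtitles_for_snippet(words, snippet_start, snippet_end, max_words=4):
    """Build subtitle cues for one snippet from the episode-level word list."""
    # Keep only the words that lie completely inside the snippet.
    selected = [w for w in words
                if w.start >= snippet_start and w.end <= snippet_end]
    cues = []
    for i in range(0, len(selected), max_words):
        group = selected[i:i + max_words]
        cues.append(Cue(
            text=" ".join(w.text for w in group),
            # Shift the times so that the snippet starts at zero.
            start=group[0].start - snippet_start,
            end=group[-1].end - snippet_start,
        ))
    return cues


# The transcription is corrected once at the episode level ...
episode = [
    Word("welcome", 0.0, 0.5), Word("to", 0.5, 1.0), Word("our", 1.0, 1.5),
    Word("podcast", 1.5, 2.0), Word("today", 2.0, 2.5),
]
# ... and every snippet, whatever its start and length, reuses it.
for cue in subtitles_for_snippet(episode, 0.5, 2.5, max_words=2):
    print(cue)
```

With this shape, a correction to a single word is stored once and shows up in every video cut from the same episode, regardless of the snippet boundaries.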

Our new version will keep this word timing information and provide an appropriate user interface for editing the words when the user corrects the transcription. A challenge for us will be to reduce the implementation effort to an acceptable level for our small company. This transcription feature is not our main business, so we cannot and will not try to provide user interfaces as sophisticated as those of Descript or Trint. But we will let our users experience a little bit of the magic made possible by deep learning when they transcribe their audio snippets in Audiocado :-)